What You Didn't Realize About DeepSeek Is Powerful - But Very Simple


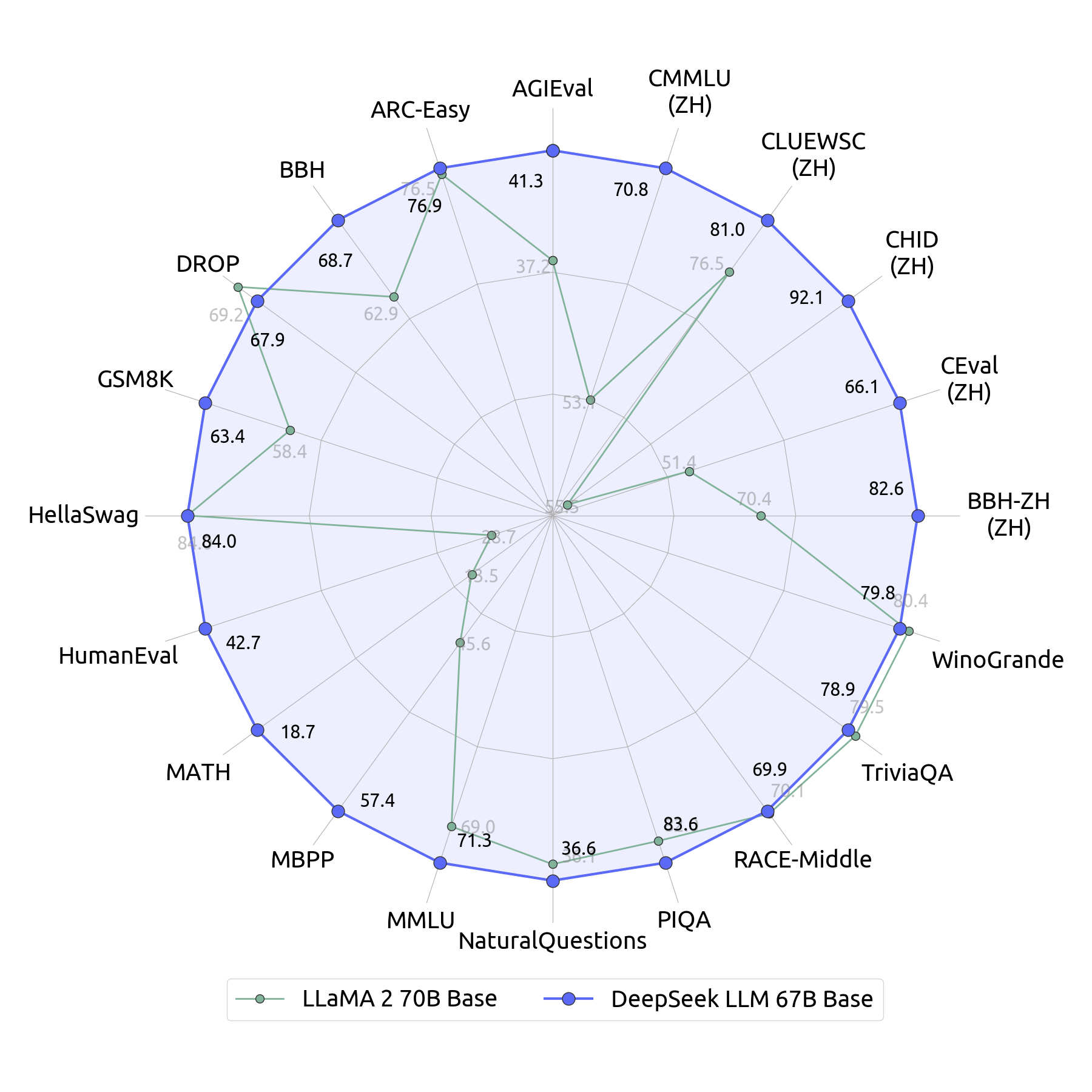

DeepSeek differs from other language models in that it is a family of open-source large language models that excel at language comprehension and versatile application. The base models were initialized from corresponding intermediate checkpoints after pretraining on 4.2T tokens (not the version at the end of pretraining), then pretrained further for 6T tokens, then context-extended to a 128K context length. Reinforcement learning (RL): the reward model was a process reward model (PRM) trained from Base according to the Math-Shepherd method. Fine-tune DeepSeek-V3 on "a small amount of long Chain of Thought data to fine-tune the model as the initial RL actor".

The best hypothesis the authors have is that humans evolved to think about relatively simple things, like following a scent in the ocean (and then, eventually, on land), and this kind of work favored a cognitive system that could take in a huge amount of sensory data and compile it in a massively parallel way (e.g., how we convert all the information from our senses into representations we can then focus attention on), then make a small number of decisions at a much slower rate.

Turning small models into reasoning models: "To equip more efficient smaller models with reasoning capabilities like DeepSeek-R1, we directly fine-tuned open-source models like Qwen and Llama using the 800k samples curated with DeepSeek-R1," DeepSeek writes.
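
A process reward model, as mentioned above, scores each intermediate step of a solution rather than only the final answer. The text does not say how those per-step scores are combined or used during RL, so the following is only a minimal sketch under assumptions: the step scores are hard-coded hypothetical values (no real model is called), and the two aggregation rules shown (minimum and product) are common choices, not necessarily what DeepSeek used.

```python
from math import prod


def solution_score(step_scores, how="min"):
    """Collapse per-step scores into a single score for the whole solution."""
    if not step_scores:
        raise ValueError("a solution needs at least one scored step")
    if how == "min":
        # A solution is only as good as its weakest step.
        return min(step_scores)
    if how == "prod":
        # Treat step scores as independent probabilities of being correct.
        return prod(step_scores)
    raise ValueError(f"unknown aggregation: {how}")


def pick_best(candidates, how="min"):
    """Return the name of the candidate with the highest aggregated score."""
    return max(candidates, key=lambda name: solution_score(candidates[name], how))


# Hypothetical per-step scores in [0, 1]; a trained PRM would produce these.
candidates = {
    "solution_a": [0.9, 0.8, 0.2],  # strong start, one weak step
    "solution_b": [0.7, 0.7, 0.7],  # uniformly decent
}

print(pick_best(candidates, "min"))
print(pick_best(candidates, "prod"))
```

Both rules penalize a single bad step heavily, which is the point of scoring the process instead of the outcome alone.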

Often, I find myself prompting Claude like I’d prompt an incredibly high-context, patient, impossible-to-offend colleague - in other words, I’m blunt, short, and speak in a lot of shorthand.

Why this matters - a lot of notions of control in AI policy get harder if you need fewer than a million samples to convert any model into a ‘thinker’: The most underhyped part of this release is the demonstration that you can take models not trained in any kind of major RL paradigm (e.g., Llama-70b) and convert them into powerful reasoning models using just 800k samples from a strong reasoner.

GPTQ models for GPU inference, with multiple quantisation parameter options. This repo contains GPTQ model files for DeepSeek's Deepseek Coder 6.7B Instruct. This repo contains AWQ model files for DeepSeek's Deepseek Coder 6.7B Instruct.

In response, the Italian data protection authority is seeking additional information on DeepSeek's collection and use of personal data, and the United States National Security Council announced that it had started a national security review. In particular, the Italian authority wanted to know what personal data is collected, from which sources, for what purposes, on what legal basis, and whether it is stored in China.
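
To give a feel for what the "quantisation parameter options" on the GPTQ files mentioned above control, here is a minimal sketch of group-wise weight quantization. It is an assumption-laden illustration, not GPTQ or AWQ: GPTQ additionally uses second-order information to decide how to round, while this sketch uses plain round-to-nearest. The function names and the example weights are hypothetical; `bits` and `group_size` stand in for the kind of options such repos typically expose.

```python
def quantize_group(weights, bits=4):
    """Round-to-nearest quantization of one group: returns (codes, scale, offset)."""
    levels = (1 << bits) - 1              # e.g. 15 for 4-bit codes 0..15
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / levels if hi > lo else 1.0
    # Map each weight to the nearest integer code and clamp to the valid range.
    codes = [min(levels, max(0, round((w - lo) / scale))) for w in weights]
    return codes, scale, lo


def quantize(weights, bits=4, group_size=4):
    """Quantize a flat list of weights, one (scale, offset) pair per group."""
    return [
        quantize_group(weights[i:i + group_size], bits)
        for i in range(0, len(weights), group_size)
    ]


def dequantize(groups):
    """Reconstruct approximate float weights from the quantized groups."""
    restored = []
    for codes, scale, lo in groups:
        restored.extend(c * scale + lo for c in codes)
    return restored


# Hypothetical weights; a real model has millions of these per layer.
weights = [0.12, -0.40, 0.33, 0.05, 1.50, -1.20, 0.00, 0.75]
groups = quantize(weights, bits=4, group_size=4)
restored = dequantize(groups)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"max reconstruction error: {max_error:.4f}")
```

Smaller groups or more bits reduce the reconstruction error at the cost of a larger file, which is the trade-off behind offering several parameter combinations.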

Detecting anomalies in data is crucial for identifying fraud, network intrusions, or equipment failures. Alibaba’s Qwen model is the world’s best open weight code model (Import AI 392) - and they achieved this through a combination of algorithmic insights and access to data (5.5 trillion high-quality code/math tokens). DeepSeek-R1-Zero, a model trained via large-scale reinforcement learning (RL) without supervised fine-tuning (SFT) as a preliminary step, demonstrated remarkable performance on reasoning.

In 2020, High-Flyer established Fire-Flyer I, a supercomputer that focuses on AI deep learning. DeepSeek’s system: The system is called Fire-Flyer 2 and is a hardware and software system for doing large-scale AI training.

A lot of doing well at text adventure games seems to require us to build some quite rich conceptual representations of the world we’re trying to navigate through the medium of text. For those not terminally on Twitter, a lot of people who are massively pro AI progress and anti-AI regulation fly under the flag of ‘e/acc’ (short for ‘effective accelerationism’). It works well: "We presented 10 human raters with 130 random short clips (of lengths 1.6 seconds and 3.2 seconds) of our simulation side by side with the real game."

Outside the convention center, the screens transitioned to live footage of the human and the robot and the game. Resurrection logs: They started as an idiosyncratic form of model capability exploration, then became a tradition among most experimentalists, then turned into a de facto convention.

Models developed for this challenge must be portable as well - model sizes can’t exceed 50 million parameters. A Chinese lab has created what appears to be one of the most powerful "open" AI models to date. With that in mind, I found it interesting to read up on the results of the 3rd workshop on Maritime Computer Vision (MaCVi) 2025, and was particularly interested to see Chinese teams winning 3 out of its 5 challenges.

Why this matters - asymmetric warfare comes to the ocean: "Overall, the challenges presented at MaCVi 2025 featured strong entries across the board, pushing the boundaries of what is possible in maritime vision in several different aspects," the authors write.
